The Dark Underbelly of Audiovisual AI

The World Economic Forum’s Global Risks Report highlighted a significant immediate risk: the proliferation of false information through artificial intelligence (AI). Released before the annual Davos gathering, the report emphasized the challenges posed by AI-generated content, including potential threats to information integrity and the risk of deepening societal divides.

As AI propels forward, the advent of deepfake technology, fueled by sophisticated image, video and audio-generating AI, introduces new dimensions to both innovation and peril. While these technologies offer exciting possibilities, the real-life examples of deepfake misuse cannot be ignored. This article delves into the multifaceted dangers, drawing from a spectrum of instances that underline the risks and implications of synthetic media (synonymous with AI-generated content).

The Rise of Deepfakes

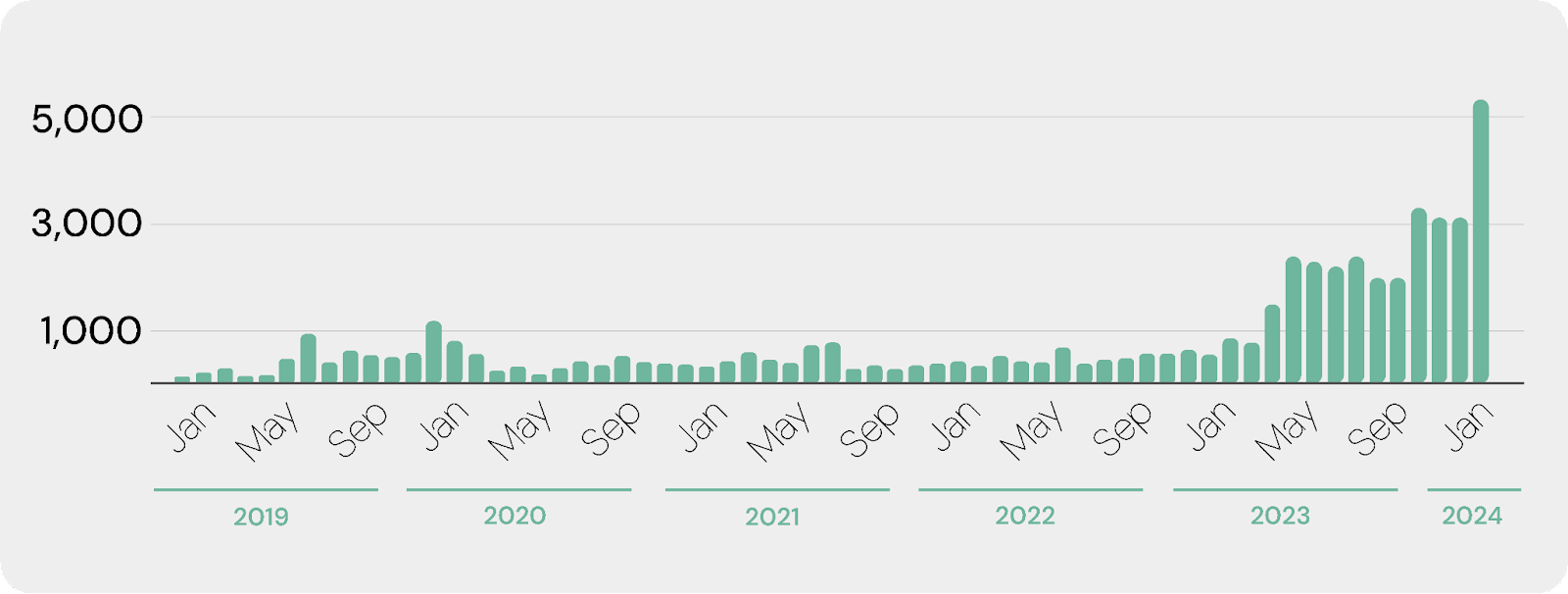

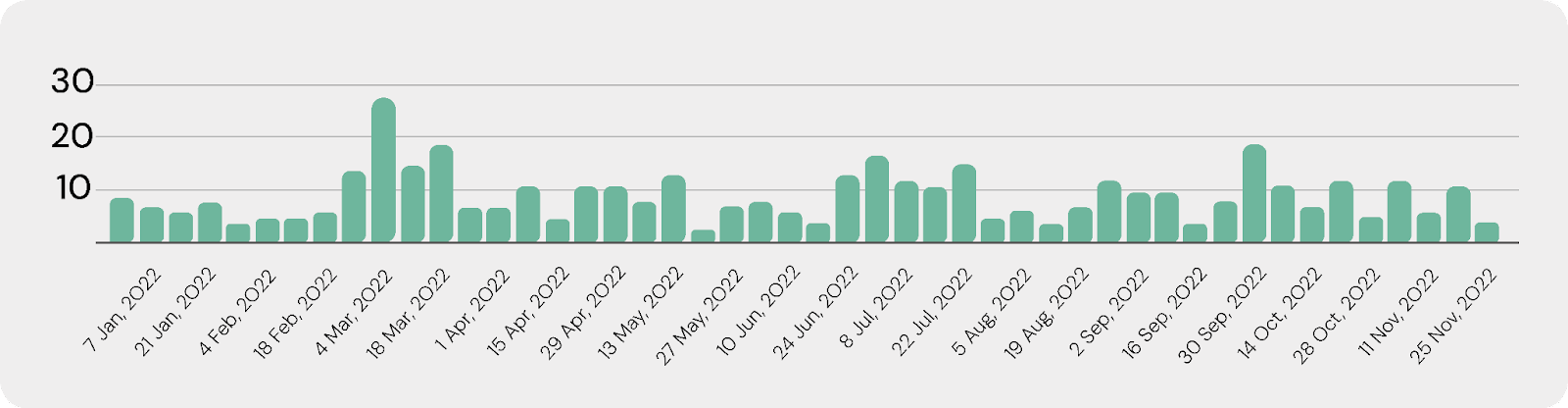

Deepfakes, rooted in the power of generative adversarial networks (GANs) and deep learning, have evolved beyond their origins in entertainment to become tools for malicious activities. According to Semantic Visions’ data, there has been a definite rise in deepfake fraud since the public adoption of text-to-image generators such as DALL-E in 2021.

- JAN 2024 – Deepfake of Taylor Swift causes outrage, is condemned by the public, and elicits comments from the White House and Microsoft as well as calls for new legal protection

- JAN 2024 – WEF in Davos points to threats associated with AI

- DEC 2023 – U.S. Representatives Blunt Rochester and Bucshon introduced the Artificial Intelligence (AI) Literacy Act. The bill would codify AI literacy as a key component of digital literacy and create opportunities to incorporate AI literacy into existing programs.

- NOV 2023 – Merriam-Webster named “Authentic” as its 2023 word of the year.

- NOV 2023 – Air India to introduce new AI features: the airline aims to boost its AI virtual agent, ‘Maharaja’, which uses ChatGPT to handle 6,000 daily customer queries.

- OCT 2023 – President Biden signed a new executive order to guide responsible AI development and guard against risks.

- NOV 2023 – Indian government launches an initiative to introduce rules on deepfakes

- NOV 2023 – Now And Then, a Beatles song, is produced and released thanks to AI

- OCT 2023 – Deepfake recordings imitate Simecka, leader of the “Progresivne Slovensko” party, and suggest vote rigging

- JUN 2023 – Two U.S. senators introduced legislation that would allow social media companies to be sued for spreading harmful material created with artificial intelligence. (Section 230 of the Communications Decency Act currently protects online platforms from liability over posted content.)

- MAY 2023 – AI and deepfake technology discussed at Cannes 2023

- APR 2023 – Google CEO warns against rush to deploy AI without oversight

- MAR 2023 – Midjourney paused free trials of its image-generation software after users cranked out realistic deepfakes including of Donald Trump getting arrested and Pope Francis in a puffer jacket.

- JUN 2022 – Google banned the training of AI systems that can be used to generate deepfakes on its Google Colaboratory platform.

- MAR 2022 – Meta removed a deepfake video of President Zelensky that purported to show him yielding to Russia.

- JUL 2021 – A YouTuber famous for making “deepfakes” of scenes from iconic films and television shows, including Star Wars, lands a job at Lucasfilm

- MAR 2021 – Deepfake Tom Cruise videos went viral on TikTok

- JAN 2020 – A hearing in the US Congress entitled “Americans at Risk: Manipulation and Deception in the Digital Age” hears claims that Facebook’s effort to ban deepfake videos is inadequate

- JUL 2019 – Microsoft removes the DeepNude app’s code from its platform GitHub

- JUN 2019 – The House Intelligence Committee holds a hearing on deepfakes and their risk to society, launching an investigation

- JUN 2019 – Facebook CEO says the delay in flagging the fake Pelosi video was an ‘execution mistake’

- MAY 2019 – A widely viewed manipulated video of Nancy Pelosi and deepfakes of Mona Lisa, Zuckerberg, and Schwarzenegger circulate online

- JAN 2019 – The CIA issued a warning against “adversaries and strategic competitors” using deepfake technologies.

A potential risk factor is that AI-generated people are already nearly impossible to distinguish from real ones and are expected to be prevalent by 2024. The technology, exemplified by photorealistic faces created by AI systems like Generated.Photos, raises concerns about its potential misuse for fraudulent activities and the spread of misinformation. Theresa Payton, a former White House Chief Information Officer, predicts the advent of “AI persons” that combine AI features with real data, posing challenges for detection and recovery.

The potential dangers are vividly exemplified by a series of real-life instances such as:

Deepfakes in Global Conflicts:

In the complex landscape of global conflicts, deepfake technology has become a formidable weapon deployed by various actors to manipulate narratives and influence public opinion. For instance, DW Fact Check examined recent instances in which both sides of the war in Ukraine used synthetic content, including a deepfake of Russian President Vladimir Putin suggesting a peaceful resolution, and a manipulated video of Ukrainian President Volodymyr Zelenskyy falsely urging soldiers to surrender. Additionally, a viral mock video depicting an attack on Paris aimed to emphasize the urgency of halting Russia in Ukraine.

These examples illustrate the potentially swift and devastating impact of deepfakes in manipulating narratives and influencing public sentiment during geopolitical conflicts.

Political Manipulation:

Gabon 2019

In Gabon, a video surfaced in 2019 ostensibly depicting President Ali Bongo delivering a New Year’s address. Critics, including political opponents, raised concerns that the video might be a deepfake, that is, a manipulated rendition of the president saying and doing things he had not. The authenticity of the video became a subject of national debate.

The consequences of such suspicions were profound. The uncertainty surrounding Bongo’s health and the authenticity of the video created a charged atmosphere. Gabon’s military attempted a coup, citing the oddness of the video as evidence that something was amiss with the president. This marked the country’s first coup attempt since 1964. The military’s move was driven by concerns over the potential manipulation of information, highlighting the significant impact deepfakes could have on political stability in developing nations.

U.S. Election Disinformation 2024

A recent incident involved a fake voice message impersonating U.S. President Joe Biden, showcasing the emerging threat of “deepfakes” in election disinformation. The manipulated audio, part of a robocalling campaign, urged voters in New Hampshire not to participate in the primary election. Investigations are ongoing, and the incident underscores the potential misuse of AI-generated content to influence electoral processes.

Fake Celebrity Pornography (Various Instances):

The recent circulation of AI-generated explicit images of Taylor Swift has highlighted the escalating issue of deepfakes targeting women and girls. X temporarily prevented users from searching for Taylor Swift’s name after pornographic deepfakes featuring the artist circulated on the platform. Joe Benarroch, X’s head of business operations, stated that this action prioritizes safety and is a temporary measure. Critics have faulted X for its slow response in curbing the spread of nonconsensual explicit content. Incidents involving high school students globally and Twitch streamers underscore the broader problem of nonconsensual AI-generated content. Taylor Swift’s fan base took action against the fake images, drawing attention to the lack of effective regulations.

Impersonation of Business Executives (2020):

The CEO of a UK energy firm fell victim to an AI-generated voice deepfake in a phone scam, transferring €220,000 ($243,000) to a Hungarian supplier in the belief that he was following orders from the chief executive of the firm’s German parent company. The fraudster used AI voice technology to mimic the German executive’s voice, in what was reported as the first known use of AI voice mimicry for fraud. The funds were subsequently moved to a Mexican account, and the perpetrators remain unidentified.

These real-life examples vividly illustrate the diverse contexts in which deepfake technology has been misused, emphasizing the urgent need for robust measures to detect, prevent, and respond to the dangers posed by synthetic media. Semantic Visions’ data-driven approach allows us to track and analyze the rise of any trend, commodity, company, or individual, providing essential information to comprehend the risks and implications. In an era where innovation collides with peril, Semantic Visions stands at the forefront, offering crucial perspectives to navigate the challenges in the fast-evolving media landscape. As society grapples with the ethical implications of new technology, striking a balance between innovation and responsible use becomes imperative to safeguard the integrity of any institution or company.